While large language models (LLMs) demonstrate remarkable capabilities across general tasks, they often lack the depth and nuance required for specialized domains. Techniques like Retrieval-Augmented Generation (RAG) can provide context but do not enable the LLM to replicate the complex reasoning of Subject Matter Experts (SMEs). This underscores the crucial role of SMEs in enhancing LLM performance.

Companies like Harvey, Trellis Law, Sixfold, and many others are already leveraging SMEs to augment the quality of their LLM products with expert validation on domain-specific tasks. In this post, I'll cover a tactical strategy for using SME annotations to continually improve LLM performance.

Tactical Strategies to Improve LLM Experimentation with SME Annotations

You are probably already reviewing LLM outputs by eye, i.e., doing "vibe checks." To benefit from these insights, make your thought process explicit and codify it as custom grading criteria (e.g., continuous or categorical scores). Once you have annotations, they unlock two downstream improvements:
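Before getting to those, here is a minimal sketch of what codified grading criteria might look like in plain Python. The `Criterion` class, the two `RUBRIC` entries, and the `annotate` helper are hypothetical names made up for illustration; they are not part of Parea's SDK, and your own criteria will differ.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Sequence, Union

Score = Union[str, float]


@dataclass(frozen=True)
class Criterion:
    """One grading criterion an SME applies to an LLM output (hypothetical schema)."""
    name: str
    description: str
    # Categorical criteria list their allowed labels; continuous ones leave this as None.
    labels: Optional[Sequence[str]] = None
    # Continuous criteria are scored within [low, high].
    low: float = 0.0
    high: float = 1.0

    def validate(self, value: Score) -> Score:
        """Return the score if it is legal for this criterion, else raise ValueError."""
        if self.labels is not None:
            if value not in self.labels:
                raise ValueError(f"{self.name}: {value!r} not in {list(self.labels)}")
            return value
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise ValueError(f"{self.name}: expected a number, got {value!r}")
        if not self.low <= value <= self.high:
            raise ValueError(f"{self.name}: {value} outside [{self.low}, {self.high}]")
        return float(value)


# Illustrative rubric only: one categorical and one continuous criterion.
RUBRIC = [
    Criterion("citation_accuracy", "Are the cited sources correct?",
              labels=("correct", "partially_correct", "incorrect")),
    Criterion("completeness", "How much of the expected answer is covered (0 to 1)?"),
]


def annotate(rubric: Sequence[Criterion], scores: Dict[str, Score]) -> Dict[str, Score]:
    """Check one SME's scores against the rubric and return the validated annotation."""
    missing = [c.name for c in rubric if c.name not in scores]
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    return {c.name: c.validate(scores[c.name]) for c in rubric}
```

Writing the rubric down like this forces the "vibe check" into named, bounded scores, so two reviewers grading the same output are at least answering the same questions.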

Hill-climbing as a collaboration between SMEs and Eng:

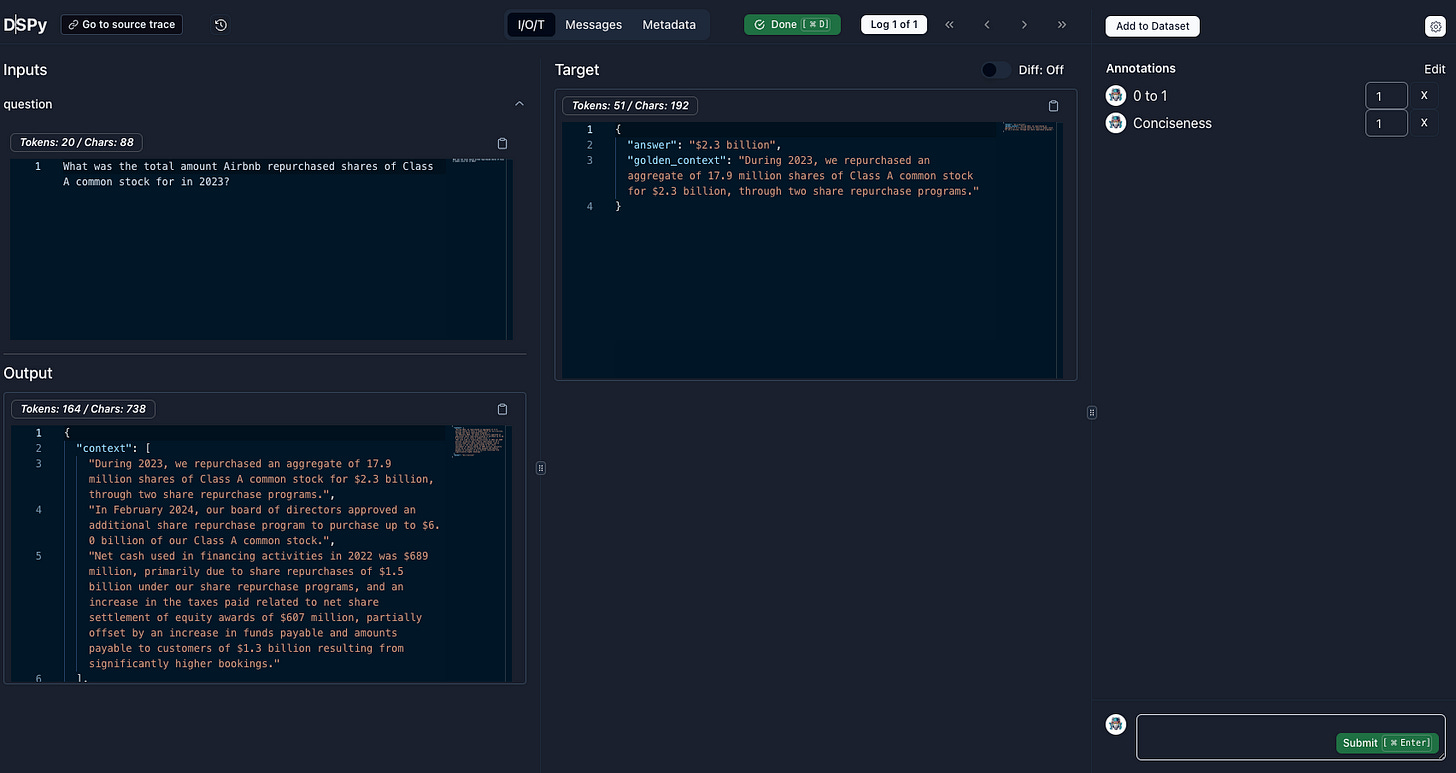

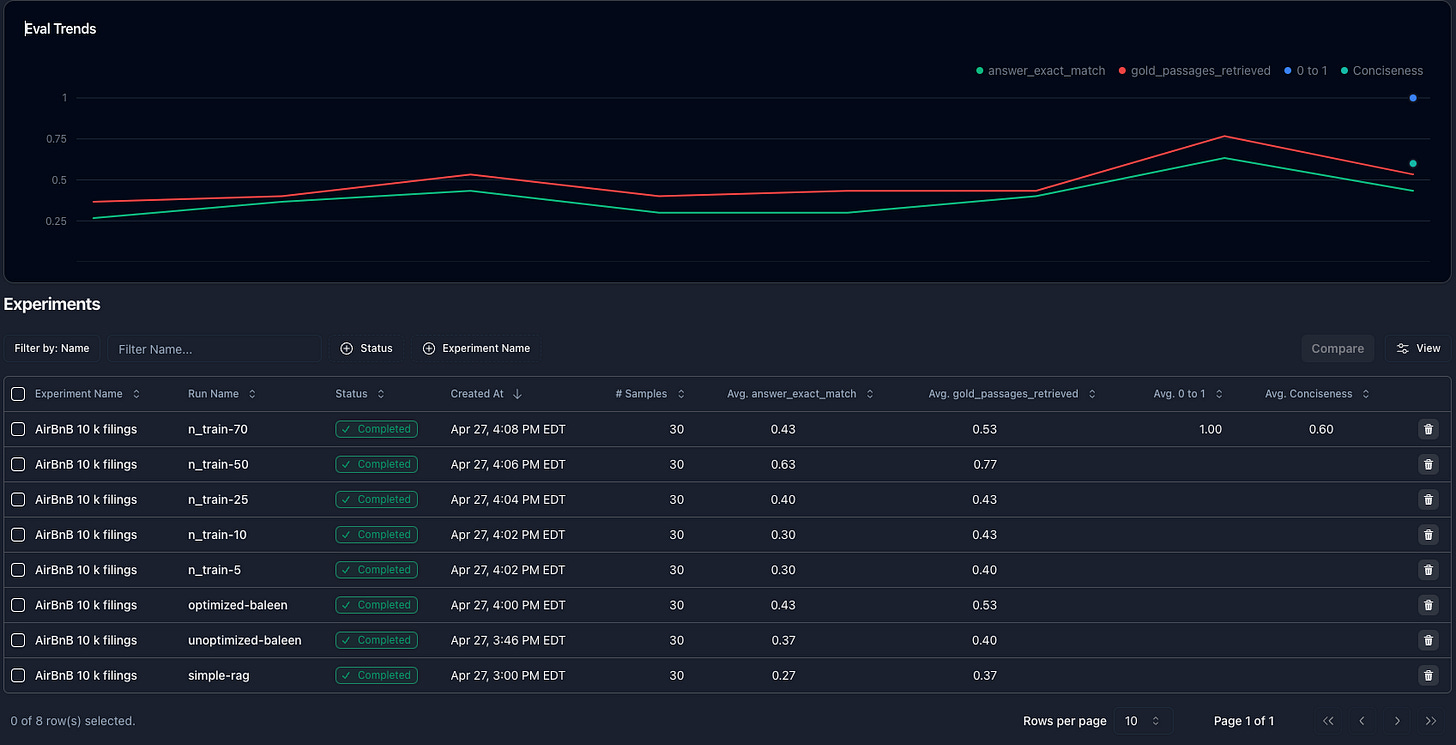

For many tasks, there is no single correct answer but an ever-improving target. One pattern we've seen work at Parea is for customers to have their SMEs grade LLM experiments using our Annotation Queue.

The engineering team then continually uses the feedback and scores to improve their LLM pipeline. Scores are automatically associated with the specific LLM call and synced across the platform. Whenever an SME flags a great LLM response, that data point is saved, and you can use it as a new "target answer" for your LLM judge evaluations.

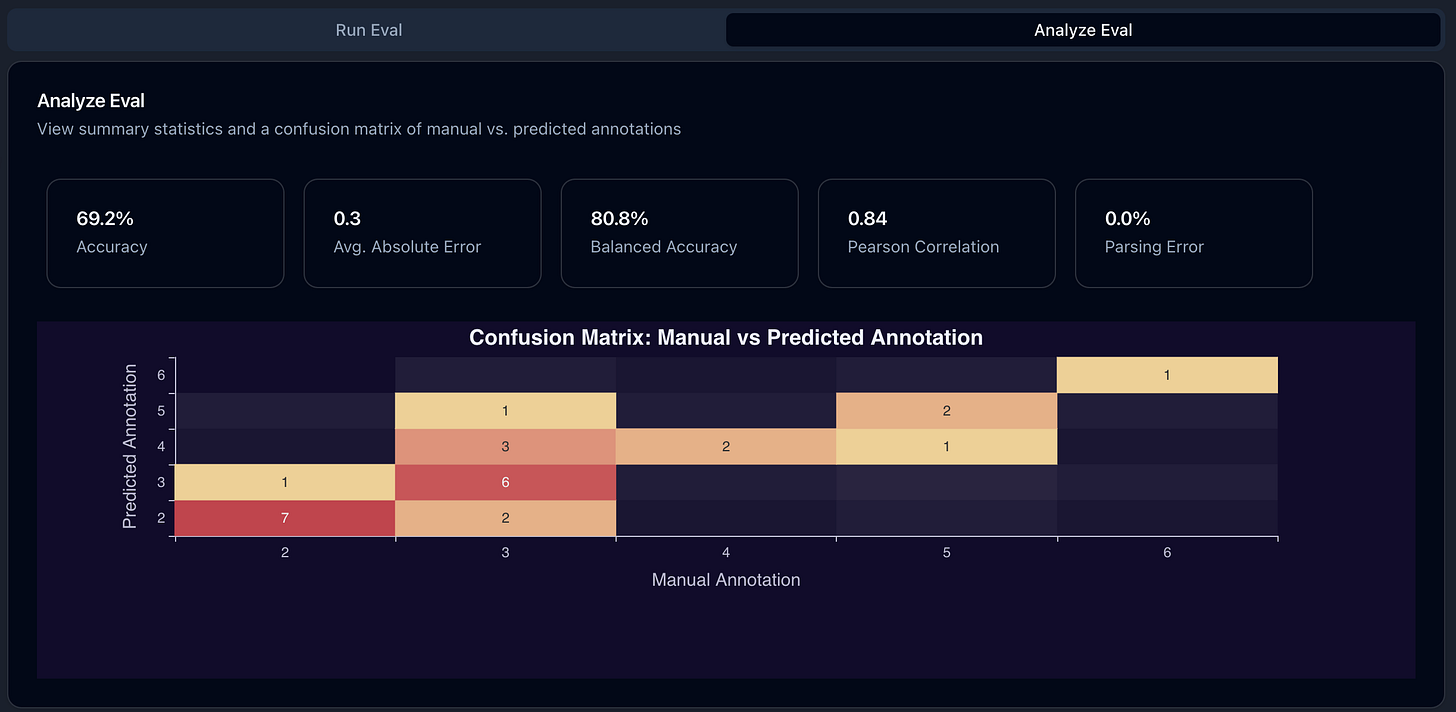
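To make the hill-climbing idea concrete, here is a rough sketch of how SME-approved responses could be promoted to target answers. It is plain Python rather than Parea's API: the `Annotation` record, the `update_targets` function, and the `0.9` threshold are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class Annotation:
    """One SME-graded LLM response (hypothetical record layout)."""
    input_id: str   # identifies the test case / prompt input
    response: str   # the LLM output the SME reviewed
    score: float    # SME score, assumed to lie in [0, 1]


def update_targets(
    targets: Dict[str, Annotation],
    new_annotations: Iterable[Annotation],
    threshold: float = 0.9,  # assumed cutoff for a "great" response
) -> Dict[str, Annotation]:
    """Return a copy of `targets` where each input keeps its best SME-approved response.

    A target is only replaced by a strictly higher-scoring response,
    so the reference set can improve over time but never regress.
    """
    updated = dict(targets)
    for ann in new_annotations:
        if ann.score < threshold:
            continue  # not good enough to serve as a target answer
        current = updated.get(ann.input_id)
        if current is None or ann.score > current.score:
            updated[ann.input_id] = ann
    return updated
```

Each round of SME review can be fed through `update_targets`, and the resulting responses used as the reference answers an LLM judge compares against.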

Bootstrapped Evaluations aligned with Expert Judgement:

With Parea, you can take as few as 20-30 manually annotated samples from your SMEs and use the eval bootstrapping feature to create a custom evaluation metric aligned with your annotations.
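I won't describe how the bootstrapping feature works internally here, but it helps to have a way to check whether any automated metric really agrees with your experts. One common choice is Cohen's kappa, which corrects raw agreement for chance. The sketch below is a generic, dependency-free implementation; `cohen_kappa` and `best_aligned` are hypothetical helper names, and the labels are assumed to be categorical.

```python
from collections import Counter
from typing import Dict, Sequence


def cohen_kappa(sme_labels: Sequence[str], metric_labels: Sequence[str]) -> float:
    """Chance-corrected agreement between SME labels and an automated metric's labels."""
    if len(sme_labels) != len(metric_labels) or not sme_labels:
        raise ValueError("label sequences must be non-empty and of equal length")
    n = len(sme_labels)
    # Fraction of samples where the metric matches the SME.
    observed = sum(s == m for s, m in zip(sme_labels, metric_labels)) / n
    # Agreement expected by chance, from each rater's label frequencies.
    sme_counts, metric_counts = Counter(sme_labels), Counter(metric_labels)
    all_labels = sme_counts.keys() | metric_counts.keys()
    expected = sum(sme_counts[l] * metric_counts[l] for l in all_labels) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1.0 - expected)


def best_aligned(candidates: Dict[str, Sequence[str]], sme_labels: Sequence[str]) -> str:
    """Return the name of the candidate metric whose labels agree most with the SMEs."""
    if not candidates:
        raise ValueError("no candidate metrics given")
    return max(candidates, key=lambda name: cohen_kappa(sme_labels, candidates[name]))
```

With only 20-30 annotated samples the kappa estimate will be noisy, so treat it as a sanity check rather than a precise measurement.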

To take this to the next level, once you have these new evaluation metrics, you can use tools like Zenbase+Parea to optimize your prompts to maximize this new eval!
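Stripped of any tooling, the core loop of prompt optimization is "score each candidate prompt with your eval and keep the best one." The sketch below shows only that idea and is not how Zenbase or Parea implement it; `pick_best_prompt`, `run_llm`, and `metric` are placeholder names, and you would plug in your own model call and your SME-aligned eval.

```python
from statistics import mean
from typing import Callable, Sequence, Tuple


def pick_best_prompt(
    prompts: Sequence[str],
    inputs: Sequence[str],
    run_llm: Callable[[str, str], str],   # (prompt, input) -> model output; supplied by you
    metric: Callable[[str, str], float],  # (input, output) -> score; your aligned eval
) -> Tuple[str, float]:
    """Return the prompt with the highest mean eval score over `inputs`, plus that score."""
    if not prompts or not inputs:
        raise ValueError("need at least one prompt and one input")
    scored = [
        (mean(metric(x, run_llm(prompt, x)) for x in inputs), prompt)
        for prompt in prompts
    ]
    # On ties, max() keeps the earliest prompt in the list.
    best_score, best_prompt = max(scored, key=lambda pair: pair[0])
    return best_prompt, best_score
```

Real optimizers search a much larger space of prompts and few-shot examples, but they are still climbing the same eval score, which is why aligning that score with your SMEs first matters.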

Conclusion

Integrating SME feedback can accelerate LLM development and help codify domain expertise into reliable LLM products.

“I may venture to affirm of the rest of mankind, that they are nothing but a bundle or collection of different perceptions, which succeed each other with an inconceivable rapidity, and are in a perpetual flux and movement.” - David Hume, A Treatise of Human Nature